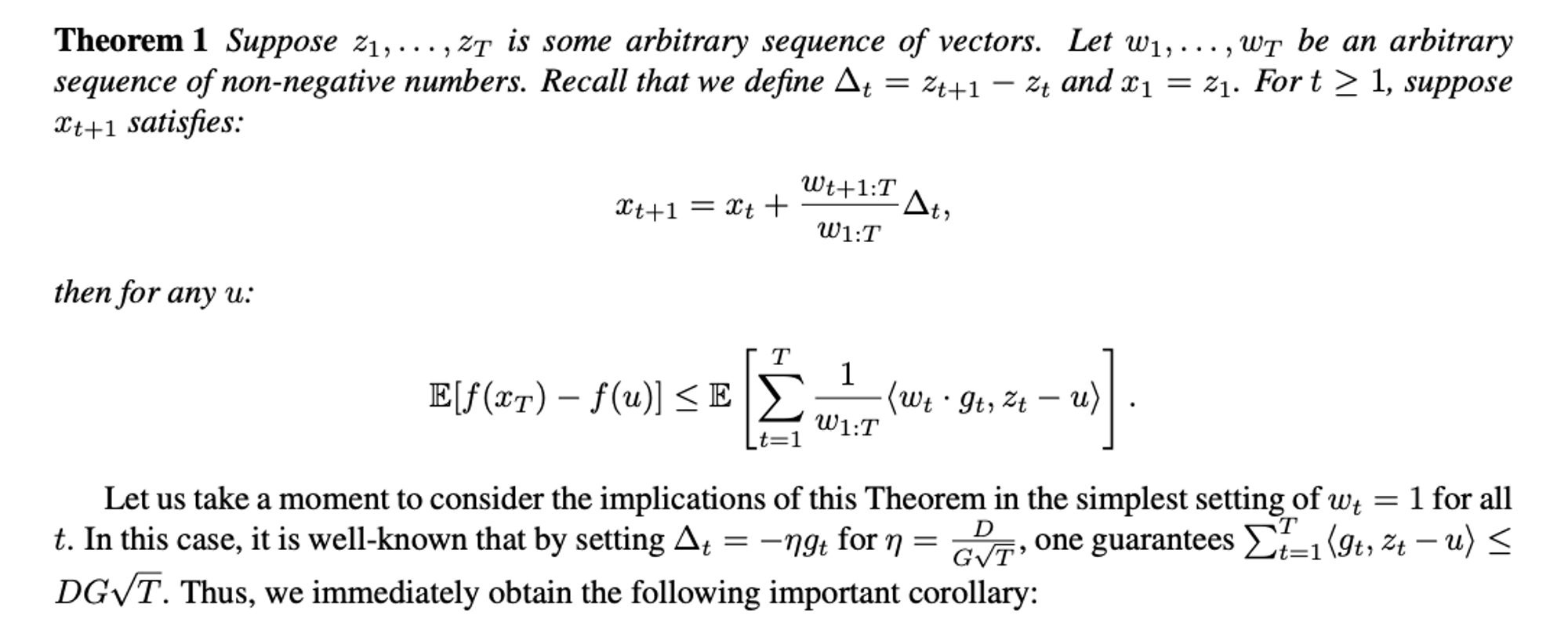

Main Analytical Result

My interpretation of the variables:

- : weights (what they call “iterates” over time)

- : w_updates * -LR (but not schedule for LR)

- : difference in consecutive s

- : grads (=w_updates for SGD)

- : expected max grad across time

- : schedule weights (not model weights)

- : the function to be minimised

- : some arbitrary weight that we hope is optimal (i.e. minimises )

- the distance from the first iterate to the optimal one

Hence what Theorem 1 tells us is that if we have a series of optimiser outputs () where there is some bound on , we can produce a series of weights (iterates) where the final one gives an which is bounded wrt. the optimal one.

The specific example they give is that SGD with the regret is bounded by